Revista da ABENO

Print version ISSN 1679-5954

Rev. ABENO vol.16 no.3 Londrina Jul./Set. 2016

Augmented reality as a new perspective in Dentistry: development of a complementary tool

Realidade aumentada como uma nova perspectiva em Odontologia: desenvolvimento de uma ferramenta complementar

Glaucia Nize Martins SantosI; Everton Luis Santos da RosaII; André Ferreira LeiteII; Paulo Tadeu de Souza FigueiredoII; Nilce Santos de MeloII

I Department of Dentistry, Health Sciences Faculty, University of Brasília, Brasília, Brazil

II Oral and maxillofacial surgeon, Hospital de Base of Federal District, Brazil

ABSTRACT

The purpose of this study is to introduce an Augmented Reality visualization and interaction tool for mobile devices that uses three-dimensional (3D) volumetric images from patients' real tomographic acquisitions, and to describe the steps for preparing the models for such 3D visualizations. Augmented Reality was built by combining tomographic images with open-source software in four steps: (1) image acquisition, which yields multi-planar images that can be visualized as 3D renderings and are the basis for constructing polygonal surfaces of specific anatomic structures of interest; (2) creation of volumetric models, in which the 3D volumetric model can be saved and exported as a 3D polygonal mesh in .stl file format; (3) model simplification, which simplifies the matrix of polygonal surfaces and reduces the model's file size; and (4) creation of the augmented reality project. Once these procedures are performed, the augmented reality project can be saved and visualized on mobile devices. The volumetric model from a computed tomography acquisition is displayed on the mobile device screen, superimposed on a marker. This approach facilitates visualization of the model, giving the precise location of structures and abnormalities such as supernumerary teeth, bone fractures and asymmetries. The model is also saved for repeated future visualization. The application of augmented reality is a new perspective in dentistry, although it is at an early stage. It can be created by integrating multiple technologies and has great potential to support learning and teaching and to improve how 3D models from medical images are viewed.

Descriptors: Augmented Reality. Dentistry. Digital Image. Education. Radiology.

RESUMO

O objetivo desse trabalho é introduzir uma ferramenta de visualização e interação baseada em realidade aumentada (RA) em dispositivos móveis utilizando imagens volumétricas em três dimensões (3D) a partir de aquisições tomográficas reais de pacientes, e descrever os passos para o preparo dos modelos para tais visualizações tridimensionais. A RA foi construída correlacionando imagens tomográficas e programas de computador livres, na seguinte sequência: (1) imagem adquirida, que consiste em imagens multiplanares que podem ser visualizadas como renderizações 3D e são a base para a construção de superfícies poligonais de estruturas anatômicas específicas de interesse, (2) criação dos modelos volumétricos, passo no qual o modelo 3D pode ser salvo e exportado como uma malha poligonal 3D em formato de arquivo .stl, (3) simplificação do modelo, que deve ser executada com a finalidade de simplificar a matriz de superfícies poligonais e consequentemente reduzir os megabytes do modelo, e (4) criação do projeto de realidade aumentada. Essa abordagem facilita a visualização do modelo tomográfico, dando a localização precisa de estruturas e anormalidades, como dentes supranumerários, fraturas ósseas e assimetrias. Além disso, o referido modelo pode ser salvo para múltiplas visualizações futuras. A aplicação da realidade aumentada é uma nova perspectiva em Odontologia apesar de estar em fase inicial. Pode ser criada integrando múltiplas tecnologias e apresenta grande potencial para auxiliar o ensino e a aprendizagem, e para melhorar a forma como modelos 3D originados de imagens médicas são visualizados.

Descritores: Realidade Aumentada. Odontologia. Imagem Digital. Radiologia.

1 INTRODUCTION

Nowadays, one of the most relevant technological tools available in our information-driven society is augmented reality, which has been developed and applied in several fields such as Architecture, Advertising, Archeology, Marketing, Military and Leisure, and also has a promising future in Health Sciences, including Dentistry, especially in oral and maxillofacial surgery, orthodontics, oral radiology and dental education.1,2 Augmented reality (AR) can be defined as enhancing an individual's visual experience with the real world through the integration of digital visual elements. It is characterized by a combination of real and virtual scenes registered in three dimensions, with the possibility of interaction in real time. Therefore, an augmented reality environment allows the user to see the real world with virtual computer-generated objects superimposed or merged with real surroundings.3-6

The advent of computed tomography (CT) and magnetic resonance imaging (MRI) enabled the acquisition of three-dimensional (3D) volumetric images of a patient's body, created from multi-planar images in DICOM (Digital Imaging and Communications in Medicine) format. Volumetric models are obtained and usually visualized and manipulated in dedicated DICOM reader software.7 In Dentistry, the use of cone beam computed tomography (CBCT) is well established, particularly for tooth localization and for the diagnosis of facial asymmetries, fractures and pathology.8,9 More effective and rational clinical decision-making for orthodontic and orthognathic surgery patients, for example, requires careful 3D image analysis techniques.10 Integrating patients' volumetric models with AR can contribute to faster and easier visualization of abnormalities, localizations and anatomic points of interest.11 Mobile devices, particularly smartphones and tablets, are an ideal platform for AR technology.

In addition, learning based on performing experiments and reflecting on their results is the basis of experiential learning, which is widely used in dentistry because dental training is partly practical. A key determinant of the effectiveness of experiential learning is interactivity.12 As far as learning content is concerned, interactivity is defined as "the extent to which users can participate in modifying the form and content of a mediated environment in real time".13 One of the most promising technologies is augmented reality, as the coexistence of virtual objects and real environments allows individuals to visualize complex spatial relationships and abstract concepts, and to interact with two- and three-dimensional synthetic objects in the mixed reality.14 It has been demonstrated that virtual learning applications may provide adequate tools that allow users to learn quickly and efficiently by interacting with virtual environments.15

This article introduces an AR visualization and interaction tool for mobile devices that uses 3D volumetric images from patients' real tomographic acquisitions, and describes the steps for preparing the models for such 3D visualizations. To provide insights into the opportunities offered by AR, it also presents the advantages, challenges and perspectives of AR in dentistry.

2 MATERIALS AND METHODS

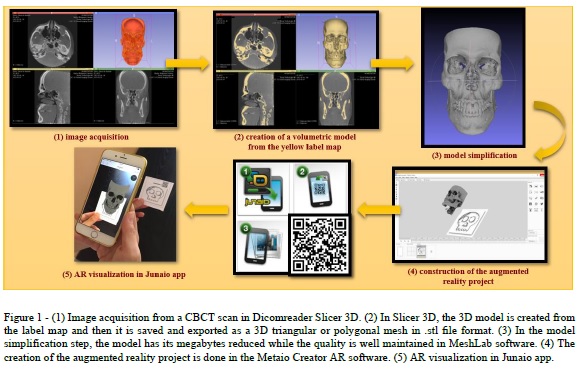

AR using tomographic volumetric models can be built by following these steps (figure 1): (1) importing or accessing a previously acquired image, (2) creating the volumetric models, (3) simplifying the models, and (4) creating the augmented reality project. Once these procedures have been carried out, the AR project can be saved and visualized on mobile devices.

In order to prepare 3D volumes, data coming from CBCT image acquisition will be described, since it is widely used in dentistry. A CBCT scan in DICOM format can be used with any protocol of choice regarding field-of-view (FOV) and slice thickness. These multi-planar images can be visualized as 3D renderings and are the basis for constructing polygonal meshes or surfaces of specific anatomic structures of interest.

In this study, the DICOM module of 3D Slicer, an open-source tool, is used to open and visualize the CBCT scan images in DICOM format.16 For this purpose, a notebook with the following specification was used: Intel Inside™ Core i7, 16 GB of RAM and a 2 GB off-board video card. First, the 3D model is displayed in the volume rendering module. The CT-bone preset is selected and, in the shift option, only hard tissues are chosen to be shown. It is also possible to crop the image to the desired region of interest (ROI).

The editor module is used to obtain a 3D representation of the hard and soft tissues by performing image segmentation with the thresholding tool. Segmentation identifies and delineates the anatomic structures of interest in the CBCT scan and results in the 3D volumetric label map files. Thresholding classifies a voxel (a volume element of the 3D image) depending only on its intensity.17 An intensity range is specified with lower and upper threshold values. Each voxel belongs to the selected class (bone, for example) if, and only if, its intensity level is within the specified range. The appropriate value range has to be selected for each patient, since bone density varies between patients and intensity values of bone can vary between scanners.10
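The thresholding rule described above can be illustrated with a minimal sketch. It is not the 3D Slicer implementation; the function name, the toy volume and the 300 to 2000 intensity range are hypothetical values chosen only for illustration.

```python
def threshold_label_map(volume, lower, upper):
    """Return a binary label map: 1 where lower <= intensity <= upper, else 0."""
    return [
        [[1 if lower <= voxel <= upper else 0 for voxel in row] for row in slice_]
        for slice_ in volume
    ]


# Toy volume (2 slices x 2 rows x 3 columns) with made-up intensities.
volume = [
    [[-1000, 40, 700], [60, 1200, -950]],
    [[300, 1500, 20], [-1000, 900, 2500]],
]

# Hypothetical bone range; a real range must be tuned per patient and scanner.
labels = threshold_label_map(volume, lower=300, upper=2000)

# Count how many voxels were assigned to the selected class.
bone_voxels = sum(v for slice_ in labels for row in slice_ for v in row)
print(labels)       # [[[0, 0, 1], [0, 1, 0]], [[1, 1, 0], [0, 1, 0]]]
print(bone_voxels)  # 5
```

Note that the voxel with intensity 2500 is excluded because it lies above the upper limit, which is how a range (rather than a single cut-off) can help to leave out very bright material.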

After segmentation, the 3D volumetric model is created from the label map by clicking the Model button. It can then be saved and exported as a 3D triangular or polygonal mesh (3D surface model) in .stl (STereoLithography) file format.
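To make the .stl format concrete, the sketch below serializes a triangular mesh in the binary STL layout (an 80-byte header, a 32-bit triangle count, then 50 bytes per triangle). It is only an illustration of the file structure, not the exporter used by 3D Slicer; the function names and the toy tetrahedron are assumptions.

```python
import struct


def triangle_normal(a, b, c):
    """Unit normal of triangle (a, b, c) from the cross product of two edges."""
    ux, uy, uz = b[0] - a[0], b[1] - a[1], b[2] - a[2]
    vx, vy, vz = c[0] - a[0], c[1] - a[1], c[2] - a[2]
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = (nx * nx + ny * ny + nz * nz) ** 0.5
    if length == 0:
        return (0.0, 0.0, 0.0)  # degenerate triangle
    return (nx / length, ny / length, nz / length)


def stl_bytes(triangles, header_text=b"toy mesh"):
    """Serialize triangles (each a tuple of three xyz points) as binary STL."""
    header = header_text[:80].ljust(80, b"\0")
    chunks = [header, struct.pack("<I", len(triangles))]
    for a, b, c in triangles:
        normal = triangle_normal(a, b, c)
        # 12 little-endian floats (normal + 3 vertices) and a 2-byte attribute field.
        chunks.append(struct.pack("<12fH", *normal, *a, *b, *c, 0))
    return b"".join(chunks)


# Toy tetrahedron standing in for a segmented surface.
v0, v1, v2, v3 = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
tetrahedron = [(v0, v2, v1), (v0, v1, v3), (v0, v3, v2), (v1, v2, v3)]

data = stl_bytes(tetrahedron)
print(len(data))  # 284 = 80 + 4 + 4 * 50
```

Because every triangle costs a fixed 50 bytes in this layout, the size of a binary .stl file grows linearly with the number of faces, which is why reducing the face count reduces the file size.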

The next step is model simplification, which simplifies the matrix of polygonal meshes and thereby reduces the model's file size. For this procedure, the open-source software MeshLab18 is used. In MeshLab, the file size can be reduced while quality is largely maintained through Filters > Remeshing, Simplification and Reconstruction > Quadric Edge Collapse Decimation. Models from CBCT acquisitions also contain considerable noise and many artefacts, which can be removed manually with Edit > Select Faces in a Rectangular Region (the undesirable faces are selected in red) followed by pressing Delete. The .stl file is then converted into a format such as .obj or .fbx (FilmBoX). The same conversion applies to digital dental models and digitized dental plaster models: since they are acquired in .stl format, they are simplified to a smaller file size and converted to .obj or .fbx. Model simplification can be done in any modelling and rendering software, such as Autodesk® 3ds Max®19 or Blender 3D.20
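The idea behind mesh simplification can be sketched with a much simpler technique than the one used in this study. The example below uses vertex clustering (merging all vertices that fall in the same grid cell), not MeshLab's Quadric Edge Collapse Decimation; the function name, the cell size and the flat toy mesh are assumptions for illustration only.

```python
import math


def simplify_by_vertex_clustering(vertices, faces, cell_size):
    """Merge vertices sharing a grid cell and drop collapsed or duplicate faces."""
    cell_to_index = {}
    members = []  # original vertices grouped per merged vertex
    remap = []    # original vertex index -> merged vertex index
    for vertex in vertices:
        cell = tuple(int(math.floor(coord / cell_size)) for coord in vertex)
        if cell not in cell_to_index:
            cell_to_index[cell] = len(members)
            members.append([])
        index = cell_to_index[cell]
        members[index].append(vertex)
        remap.append(index)

    # Each merged vertex is placed at the centroid of the vertices it replaces.
    new_vertices = [
        tuple(sum(coords) / len(group) for coords in zip(*group)) for group in members
    ]

    new_faces, seen = [], set()
    for a, b, c in faces:
        i, j, k = remap[a], remap[b], remap[c]
        if i == j or j == k or i == k:
            continue  # the triangle collapsed to an edge or a point
        key = frozenset((i, j, k))
        if key not in seen:
            seen.add(key)
            new_faces.append((i, j, k))
    return new_vertices, new_faces


# Toy mesh: a flat 5 x 5 grid of vertices split into 32 triangles.
n = 5
vertices = [(float(x), float(y), 0.0) for y in range(n) for x in range(n)]
faces = []
for y in range(n - 1):
    for x in range(n - 1):
        a = y * n + x
        b, c, d = a + 1, a + n + 1, a + n
        faces.extend([(a, b, c), (a, c, d)])

new_vertices, new_faces = simplify_by_vertex_clustering(vertices, faces, cell_size=2.0)
print(len(vertices), len(faces))          # 25 32
print(len(new_vertices), len(new_faces))  # 9 8
```

Vertex clustering is fast but preserves shape less well than quadric edge collapse, which chooses which edges to collapse according to the geometric error introduced; it is shown here only because it fits in a few lines.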

The AR project is developed in the Metaio Creator21 AR software. There is an open-source version of this software that allows the creation of AR scenarios on a trackable (tracking reference), using 3D models in .fbx or .obj formats. When developing the project, a simple high-definition .jpeg (Joint Photographic Experts Group) image is used as the basis of the project (the trackable), and the model is positioned over it, checking the three axes for adequate location. Afterwards, this image is used as the marker. Animations, colours, shelter and written texts can be added to the model. When the project is saved, the software uploads the AR content to a cloud account and provides a Quick Response (QR) code for the project, which is scanned with the free app JUNAIO22, previously downloaded to any mobile device.

3 RESULTS

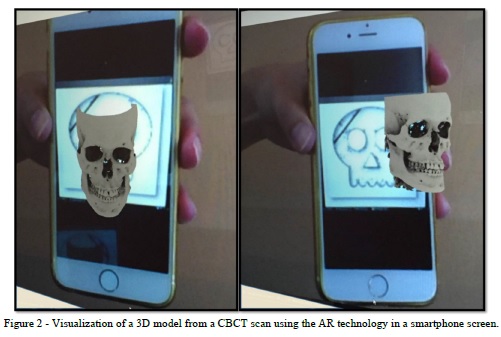

An easy and quick way to visualize 3D models from medical imaging is available on mobile devices using AR technology (figure 2). First, the free JUNAIO app must be downloaded onto a device such as a smartphone or tablet. Second, the device must be connected to the Internet so that the QR code can be scanned by the app. The QR code may be printed, or scanned from a computer monitor or a mobile device screen. Third, the mobile device is pointed at the marker, which must be the same image used as the trackable (tracking reference) in the development of the AR project. Like the QR code, this marker may be printed or displayed on a screen or monitor.

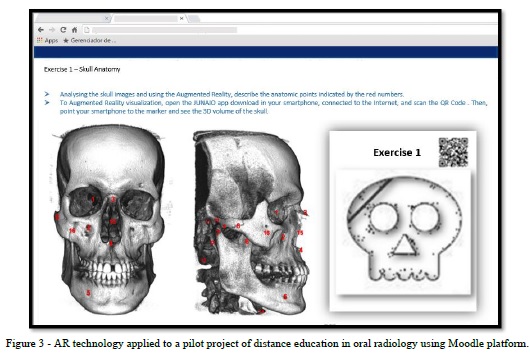

The volumetric model is then available on the mobile device screen, superimposed on the marker. The observer can interact with the model, rotating it or zooming by touching the screen. This approach facilitates the visualization of the model, giving the precise location of structures and abnormalities, such as supernumerary teeth, bone fractures and asymmetries. The model is also saved for repeated future visualization. In our study, the AR technology was successfully used as part of a pilot distance course in oral radiology on the Moodle platform (figure 3), in which clinical cases with radiographic and tomographic images, and 3D models with AR technology, were presented to students in order to make their learning easier and more attractive.

4 DISCUSSION

Although the use of AR in a pilot distance course in oral radiology was successful, it leads to discussion of some of the benefits and drawbacks of the technology. The advantages of AR can be classified into two groups: the advantages of the AR application and the advantages in the AR creation phase. Using an AR application enables the simulation, visualization and addition of information, and interaction with virtual objects without being totally immersed in virtual life.23 In a regular or distance educational context, for example, the coexistence of virtual objects and real environments allows learners to visualize complex spatial relationships and abstract concepts,14 experience phenomena that cannot be experienced in the real world,5 interact with two- and three-dimensional synthetic objects in the mixed reality24 and develop important practices and literacies that cannot be developed and enacted in other technology-enhanced learning environments.25 In this way, AR technology can provide synthetic objects for teaching, such as anatomical parts or rare items for science laboratories. Kotranza et al. (2009)26 showed an AR system in clinical medicine that embedded touch sensors in a physical environment, collected sensor data to measure learners' performances and then transformed the performance data into visual feedback.

By using this AR system, learners could receive real-time, in situ responses that may help improve their performances and enhance their psychomotor skills in a cognitive task. Rhienmora et al. (2010)27 developed an AR dental training simulator utilizing a haptic (force-feedback) device. A number of dental procedures such as crown preparation and opening access to the pulp can be simulated with various shapes of dental drill. The system allows students to practise surgery in the correct postures as in the actual environment by combining 3D tooth and tool models in the real-world view and displaying the result through a video see-through head-mounted display. Qu et al. (2015)28 presented an AR-based navigation technique that can display 3D images of the mandible with a designed cutting plane on surgical sites, providing a surgical guide for the transfer of the osteotomy lines and the positions of the screws. Espejo-Trung et al. (2015)29 developed a new learning object based on AR models to teach gold onlay preparation design at a dental school in Brazil, with a high level of acceptance among students.

Publishers of health articles and book chapters can enhance their publications by providing AR 3D volumes for readers worldwide, since printed pages limit the number of images displayed and show them only in 2D. With AR technology, readers can easily access the volumes on their own tablet or mobile phone by scanning QR codes.

The AR creation process is less expensive than that of virtual reality (VR), which is considered one of the most important advantages of AR. The process can be carried out on any computer or notebook with a 2 GB off-board video card and at least 8 GB of RAM, using free software. In addition, the models can be visualized and easily manipulated on any mobile device with default settings.

It is important to bear in mind that for the construction of 3D volumes, the patient's examination already requested by the dentist or physician is used. In order to prepare 3D volumes, data coming from different imaging modalities can be used, such as images from CBCT, CT and MRI, or digital dental models. The same image processes are applicable and can be generalized for images acquired with any 3D imaging modality, except digital dental models and digitized plaster dental models, which are acquired in .stl extension instead of DICOM. DICOM files can be opened and visualized in any 3D image analysis software of choice.

So far, the main drawbacks of AR are weak points in the technology itself: time consumption, rendering quality and cloud storage space.

Time consumption is an important disadvantage of the AR creation process because of the time spent eliminating artefacts from the 3D model in rendering software. CBCT images show more noise than multislice CT images, and the presence of metal parts increases the artefacts. Another obstacle is the need to learn how to use several software packages, one for each step. Depending on the computer configuration, the rendering time may be too long and the quality may not be ideal. Despite the time spent, once the model is ready it can be displayed many times for many people all around the world.

In terms of rendering quality, the main limitation of thresholding is that it is artefact-prone. These artefacts arise because different densities within a voxel are averaged and then represented by a single CBCT number. Therefore, the CBCT numbers of thin bony walls tend to drop below the thresholding range of bone because their density is averaged with that of the surrounding air. This effect causes artificial holes in 3D reconstructions.30 In the mandible, which has thick and dense bone, segmentation works well. However, it fails for thin bone such as the condyles and the labial surfaces of the teeth. Because the morphology and position of the condyles and the internal surfaces of the ramus and maxilla are critical for diagnosis, these regions require careful segmentation. Another source of artefacts is the presence of metallic material in the face (orthodontic appliances, dental fillings, implants, surgical plates). Metal artefact intensity values fall into the thresholding range of bone and are included in CBCT images as pronounced "star-like" streaks. There is no standard segmentation method that works equally well for all software.10
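The averaging effect described above can be worked through numerically. The sketch below assumes a simple linear mix of two materials inside one voxel; the intensity values for bone and air and the lower threshold of 300 are hypothetical and were not taken from the scans in this study.

```python
def averaged_voxel_value(bone_value, air_value, bone_fraction):
    """Intensity of a voxel filled partly with bone and partly with air."""
    return bone_fraction * bone_value + (1.0 - bone_fraction) * air_value


def min_bone_fraction(bone_value, air_value, lower_threshold):
    """Smallest bone fraction for which the averaged voxel still reaches the threshold."""
    return (lower_threshold - air_value) / (bone_value - air_value)


# Assumed values for illustration only.
BONE, AIR, LOWER_THRESHOLD = 1000.0, -1000.0, 300.0

for fraction in (1.0, 0.8, 0.5, 0.3):
    value = averaged_voxel_value(BONE, AIR, fraction)
    status = "kept as bone" if value >= LOWER_THRESHOLD else "lost (hole in the model)"
    print(f"bone fraction {fraction:.1f}: averaged value {value:7.1f} -> {status}")

print(min_bone_fraction(BONE, AIR, LOWER_THRESHOLD))
```

Under these assumed values, a voxel needs to be about 65% bone to survive thresholding, so a wall thinner than roughly two thirds of the voxel size would disappear from the reconstruction. This is consistent with the recommendation of a small voxel size for thin structures.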

Regarding CBCT images, the quality of the 3D models may be influenced by several parameters, such as scan field, voxel size and segmentation threshold selection. Previous studies recommend the use of small scan fields and a small voxel size to optimize the quality of the 3D model.31,32

The greatest disadvantage of the AR creation process is cloud storage. With the open-source software, the space is limited to 100 MB. This limitation hinders the creation of more sophisticated models, since models from DICOM files are heavy even after the simplification step. Partnerships with commercial AR software providers could increase the quality of the models and add possibilities such as changing colours and including animations.

5 CONCLUSION

As has been shown, AR application is a new perspective in dentistry although it is in an early phase. It can be created by integrating multiple technologies and has great potential to support learning and teaching, and improve how 3D models from medical images are seen. Further studies are necessary to develop easier ways to create the volumes and make the best use of them in Dentistry, including in educational and commercial environments.

ACKNOWLEDGEMENT

The authors acknowledge the support received from the Visualization, Interaction and Simulation Laboratory (L-VIS) of the University of Brasília in the development of the Augmented Reality projects.

REFERENCES

1. Craig A. Augmented reality applications. In: Understanding augmented reality. Boston: Morgan Kaufmann; 2013. p. 221–54.

2. Martín-Gutiérrez J, Fabiani P, Benesova W, Meneses M, Mora C. Augmented reality to promote collaborative and autonomous learning in higher education. Comput Human Behav 2015; 51:752-61.

3. Abe Y, Sato S, Kato K, Hyakumachi T, Yanagibashi Y, Ito M, et al. A novel 3D guidance system using augmented reality for percutaneous vertebroplasty. J Neurosurg Spine 2013; 19(4):492-501.

4. Bronack S. The role of immersive media in online education. J Contin Higher Educ 2011; 59(2):113-7.

5. Klopfer E, Squire K. Environmental detectives: the development of an augmented reality platform for environmental simulations. Educ Technol Res Dev 2008; 56(2):203-28.

6. Azuma R. A survey of augmented reality. Presence: Teleoperators and Virtual Environments 1997; 6(4):355-85.

7. Graham R, Perriss R, Scarsbrook A. DICOM demystified: a review of digital file formats and their use in radiological practice. Clin Radiol 2005; 60:1133-40.

8. Scarfe W, Farman A, Sukovic P. Clinical applications of cone-beam computed tomography in dental practice. J Can Dent Assoc 2006;72(1):75-80.

9. Macleod I, Heath N. Cone-beam computed tomography (CBCT) in Dental practice. Dental Update. 2008;35:590-8.

10. Cevidanes L, Ruellas A, Jomier J, Nguyen T, Pieper S, Budin F, et al. Incorporating 3-dimensional models in online articles. Am J Orthod Dentofac Orthop 2015; 147(5 (Suppl)):S195-204.

11. Kawamata A, Ariji Y, Langlais R. Three-dimensional computed tomography imaging in dentistry. Dent Clin North Am 2000; 44:395-410.

12. Roussou M. Learning by doing and learning through play: an exploration of interactivity in virtual environments for children. ACM Computers in Entertainment 2004;1(2).

13. Steuer J. Defining virtual reality: dimensions determining telepresence. J Commun 1992; 42(4):73–93.

14. Arvanitis T, Petrou A, Knight J, Savas S, Sotiriou S, Gargalakos M, et al. Human factors and qualitative pedagogical evaluation of a mobile augmented reality system for science education used by learners with physical disabilities. Pers Ubiquit Comput 2009; 13(3):243–50.

15. Pan Z, Cheok A, Yang H, Zhu J, Shi J. Virtual reality and mixed reality for virtual learning environments. Comput Graph 2006; 30(1):20–8.

16. 3D Slicer. Available at: http://www.slicer.org. 2015 [cited 2015 October 28].

17. Chapuis J. Computer-Aided Cranio-Maxillofacial Surgery. Switzerland: University of Bern; 2006.

18. MeshLab. Available at: http://meshlab.sourceforge.net. 2015 [cited 2015 November 11].

19. Autodesk 3ds Max. Available at: http://www.autodesk.com.br/products/3ds-max/overview. 2015 [cited 2015 October 15].

20. Blender. Home of the Blender Project. Available at: https://www.blender.org/. 2015 [cited 2015 October 23].

21. Metaio Creator. Metaio: The Augmented Reality Company. Available at: https://www.metaio.com/. 2015 [cited 2015 October 17].

22. Metaio. Metaio releases junaio 2.0 for App Store. In: Metaio, editor. junaio-20-now-in-the-app-store-next-generation-ar-browser/ Metaio; 2010.

23. Diggins D. ARLib: A C++ augmented reality software development kit. United Kingdom: Bournemouth University; 2005.

24. Kerawalla L, Luckin R, Seljeflot S, Woolard A. "Making it real": exploring the potential of augmented reality for teaching primary school science. Virtual Reality. 2006;10(3):163–74.

25. Squire K, Jan M. Mad city mystery: developing scientific argumentation skills with a place-based augmented reality game on handheld computers. J Sci Educ Technol 2007; 16(1):5-29.

26. Kotranza A, Lind D, Pugh C, Lok B. Real-time in-situ visual feedback of task performance in mixed environments for learning joint psychomotor-cognitive tasks. 8th IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Orlando, FL; 2009. p. 125-34. doi:10.1109/ISMAR.2009.5336485.

27. Rhienmora P, Gajananan K, Haddawy P, Dailey M, Suebnukarn S. Augmented reality haptics system for dental surgical skills training. VRST '10: Proceedings of the 17th ACM Symposium on Virtual Reality Software and Technology. ACM; 2010. p. 97-8.

28. Qu M, Hou Y, Xu Y, Shen C, Zhu M, Xie L, et al. Precise positioning of an intraoral distractor using augmented reality in patients with hemifacial microsomia. J Craniomaxillofac Surg 2015; 43:106-12.

29. Espejo-Trung LC, Elian SN, Luz MAAC. Development and application of a new learning object for teaching operative dentistry using augmented reality. J Dent Educ 2015; 79(11):1356-62.

30. Hemmy D, Tessier P. CT of dry skulls with craniofacial deformities: accuracy of three-dimensional reconstruction. Radiology 1985; 157(1):113-6.

31. Hassan B, Souza PC, Jacobs R, Berti SA, van der Stelt P. Influence of scanning and reconstruction parameters on quality of three-dimensional surface models of the dental arches from cone beam computed tomography. Clin Oral Investig 2010; 14(3):303-10.

32. Matta RE, von Wilmowsky C, Neuhuber W, Lell M, Neukam FW, Adler W, et al. The impact of different cone beam computed tomography and multi-slice computed tomography scan parameters on virtual three-dimensional model accuracy using a highly precise ex vivo evaluation method. J Craniomaxillofac Surg 2016;44(5):632-6.

Correspondence to:

Glaucia Nize Martins Santos

SQN 403, bloco O apto 101

Asa Norte 70835-150

Brasília, DF, Brazil

e-mail: nize.gal@gmail.com